Overview

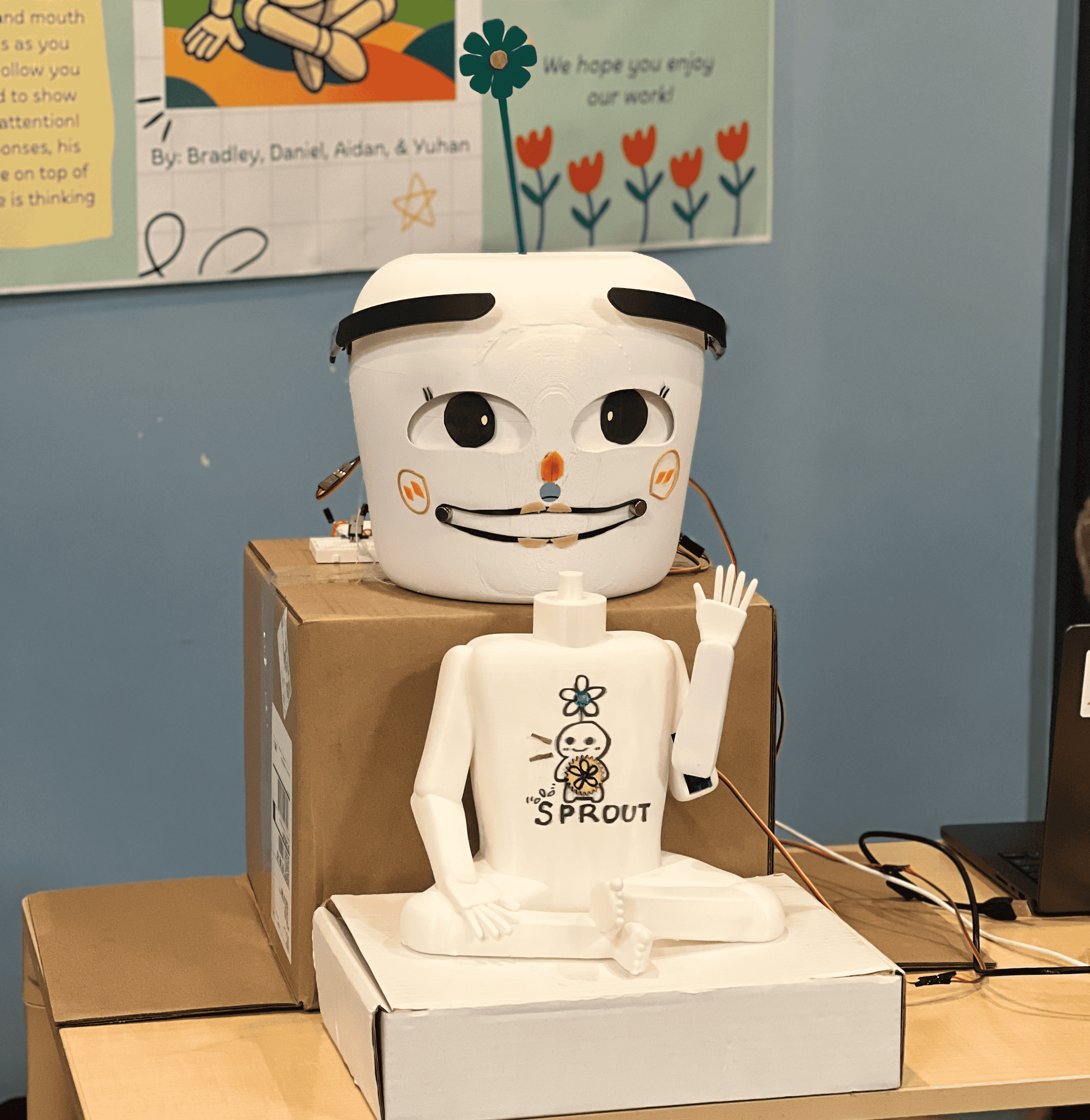

Sprout is a physical therapeutic robot that mirrors users’ facial expressions in real time to support emotional self-awareness and rehabilitation. Inspired by the cultural concept of mirror therapy — the idea that we recognize parts of ourselves through others — Sprout brings this reflective process into the tangible world through coordinated servo-driven movements and live emotion detection.

Problem Space

Mirror therapy, rooted in both physical rehab and cultural psychology, helps people recognize emotional blind spots — behaviors or feelings we only become aware of when reflected by others. While it’s used clinically to treat conditions like phantom limb pain, its potential for emotional reflection through tangible interaction remains largely untapped.

We asked: What if a robot could act as an emotional mirror — helping people reflect by physically reflecting them?

The Goal

Our goal was to translate the abstract concept of mirror therapy into a physical, emotionally expressive robot — one that could help users recognize and process their own emotions through real-time facial mirroring. To make that possible, we focused on three key goals:

- Create a system that reflects users' facial expressions to encourage emotional self-awareness and rehabilitation.

- Replace screen-based emotion displays with coordinated, servo-driven movements — including eye tracking, moving eyebrows, and a mouth.

- Use soft, rounded forms and familiar gestures to create an emotionally safe and inviting presence.

TL;DR: We set out to embody the cultural concept of mirror therapy in a physical robot that could reflect users' emotions in real time. Our goal was to create a robot that could not only recognize emotion but physically mirror it back through synchronized, expressive movement — all while feeling friendly and approachable.

We began with hand-drawn sketches to explore how a robot might physically mirror human expressions using familiar forms. Our early concepts focused on facial elements like eyes, mouth, and brows — all expressive features we could translate into servo-driven motion. We also wanted to incorporate playful, non-human gestures like a tail or arm wave to reinforce Sprout’s friendliness.

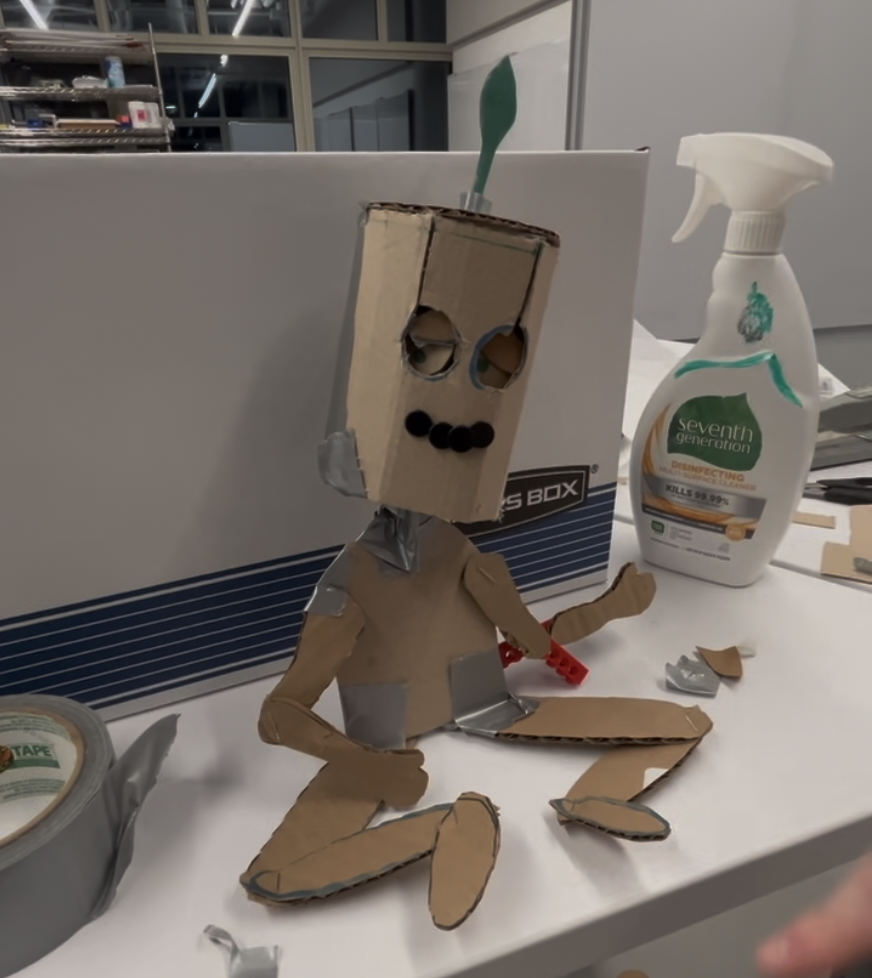

Low-Fidelity Prototypes

Next, we built a series of cardboard mockups to quickly explore Sprout’s physical proportions and internal space constraints. These low-cost prototypes helped us get a sense of the overall volume needed to hold all the servos while remaining compact and approachable. Within the first iteration, we discovered a core challenge: standard servo motors were significantly larger than we had expected, especially once we accounted for the range of motion and wiring they required. This forced us to scale up Sprout’s head and begin thinking about the servo layout and internal partitioning early on.

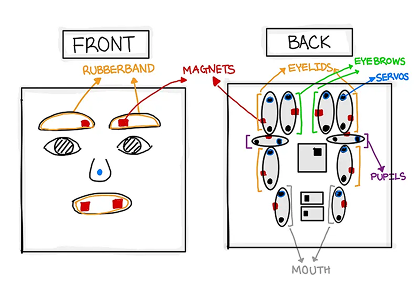

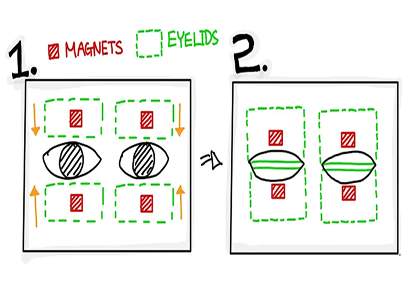

We experimented with magnet-tension mechanisms, using fishing line and scrap plastic to simulate the movement of facial features. The servos, placed outside the head for testing, pulled small magnets embedded in the mouth and eyebrows to create the expressions. These early tests let us fine-tune the motion geometry and control the tension force before committing to a more permanent material. We found, for example, that pulling the inner eyebrow vertically was more expressive than moving the entire brow laterally — a small detail that became a defining feature of Sprout’s final expressiveness.

To make the movements more realistic, we laser-cut custom servo horns and gear attachments, giving us finer control over facial articulation.

Emotion Detection Setup

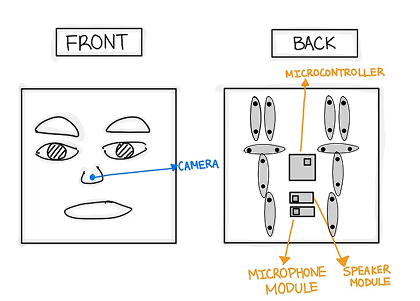

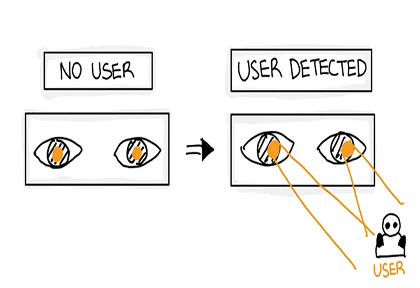

Once the foundational mechanics were working, we turned to real-time emotion detection — the other half of Sprout’s mirroring system. Our primary goal here was to achieve live responsiveness with as little lag as possible, so Sprout could reflect emotion fast enough to feel reactive, not robotic.

We used the Hume AI streaming API, which allowed us to detect over 20 nuanced emotional states from a live camera feed. For simplicity and clarity, we collapsed these into five core emotion states: joy, sadness, anger, surprise, and neutral. This mapping allowed for expressive range while keeping the servo logic manageable.
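The collapse from many fine-grained scores to five states can be sketched as follows. This is a minimal illustration, not the project's actual mapping: the label names in `CORE_GROUPS`, the grouping itself, and the `NEUTRAL_THRESHOLD` cutoff are all assumptions made for the example.

```python
from __future__ import annotations

# Hypothetical grouping of fine-grained labels into four expressive states.
# The label names are placeholders, not a confirmed list from the API.
CORE_GROUPS = {
    "joy": ["Joy", "Amusement", "Contentment"],
    "sadness": ["Sadness", "Disappointment"],
    "anger": ["Anger", "Annoyance"],
    "surprise": ["Surprise (positive)", "Surprise (negative)"],
}

# Assumed cutoff: if no group scores above this, report "neutral".
NEUTRAL_THRESHOLD = 0.3


def collapse_emotions(scores: dict[str, float]) -> str:
    """Return one of the five core states for a dict of label -> score."""
    best_state, best_score = "neutral", NEUTRAL_THRESHOLD
    for state, labels in CORE_GROUPS.items():
        # Each group is represented by its strongest fine-grained score.
        group_score = max(scores.get(label, 0.0) for label in labels)
        if group_score > best_score:
            best_state, best_score = state, group_score
    return best_state


print(collapse_emotions({"Joy": 0.8, "Sadness": 0.1}))
print(collapse_emotions({"Joy": 0.1, "Anger": 0.2}))
```

Treating "neutral" as the fallback rather than as its own scored group means a weak or ambiguous reading leaves the face at rest instead of flickering between expressions.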

We implemented a Python-based listener script that continuously received emotional classification data, then sent clean, structured commands to an Arduino microcontroller via PySerial. The Arduino was programmed to parse these commands and trigger pre-defined movement routines for Sprout’s servos. Each emotional state corresponded to a unique combination of motor movements. For example, a joyful expression might raise both eyebrows, lift the corners of the mouth, and cause the antenna to wiggle gently.
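A listener of this kind might look roughly like the sketch below. The `EMO:<state>` line format, the `EmotionSender` class, and the choice to send only on state changes are assumptions for illustration; the real command format lives in the project's code. An in-memory buffer stands in for the serial port so the example runs without hardware.

```python
import io

VALID_STATES = ("joy", "sadness", "anger", "surprise", "neutral")


def encode_command(state: str) -> bytes:
    """Build a newline-terminated ASCII command (hypothetical format)."""
    if state not in VALID_STATES:
        raise ValueError(f"unknown emotion state: {state!r}")
    return f"EMO:{state}\n".encode("ascii")


class EmotionSender:
    """Writes a command only when the state changes, to avoid flooding the link."""

    def __init__(self, port):
        # `port` is any object with a write(bytes) method, such as a
        # PySerial serial.Serial instance or an in-memory buffer.
        self.port = port
        self.last_state = None

    def send(self, state: str) -> bool:
        """Send `state` if it differs from the last one; return True if sent."""
        if state == self.last_state:
            return False
        self.port.write(encode_command(state))
        self.last_state = state
        return True


# Stand-in for the serial port so the sketch runs without an Arduino attached.
fake_port = io.BytesIO()
sender = EmotionSender(fake_port)
for detected in ["neutral", "joy", "joy", "surprise"]:
    sender.send(detected)
print(fake_port.getvalue())
```

With real hardware, `fake_port` would be replaced by something like `serial.Serial("/dev/ttyACM0", 9600, timeout=1)` from PySerial, where the port name and baud rate are placeholders that depend on the setup.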

Check out GitHub for the code.

Mid-Fidelity Prototypes

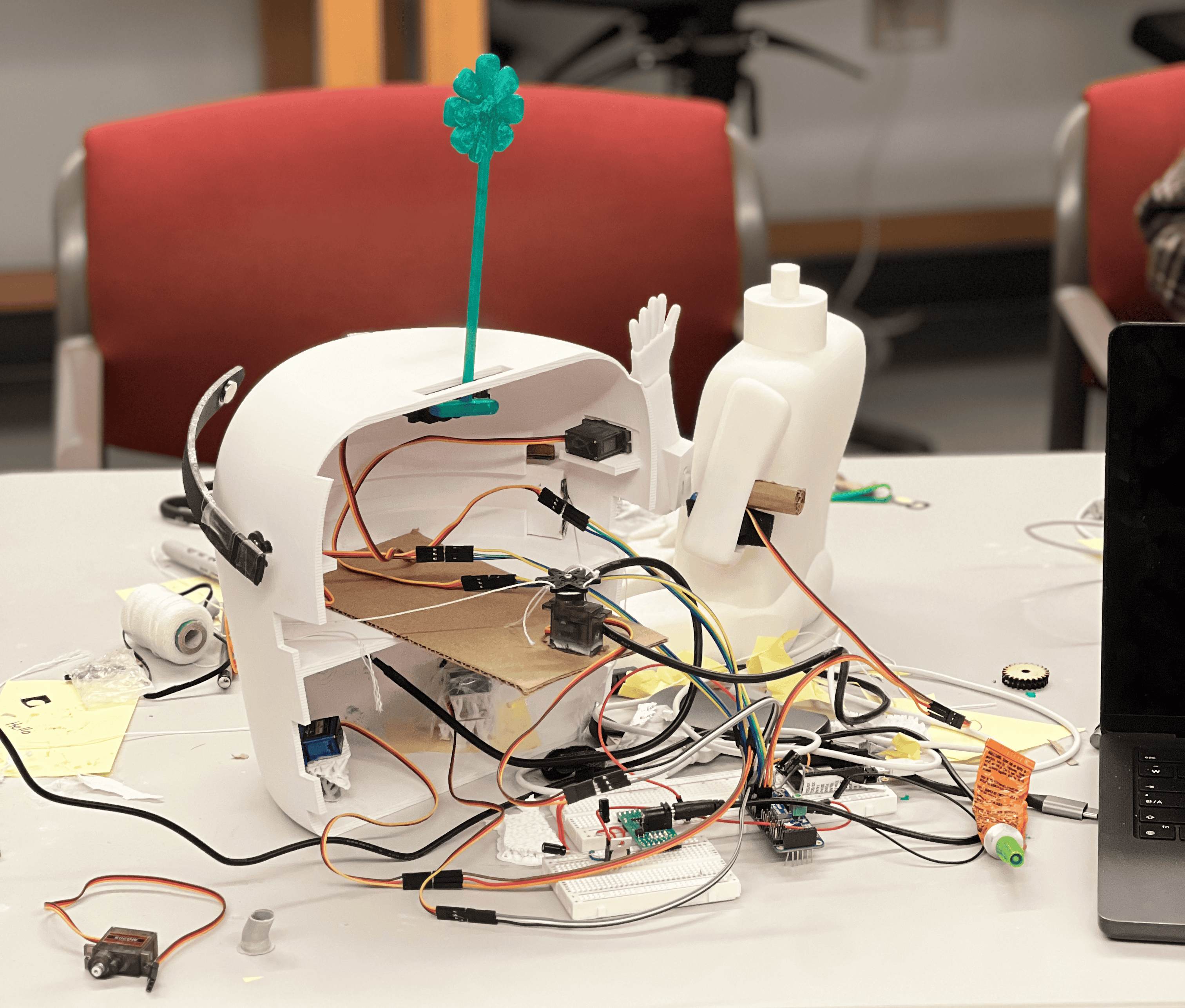

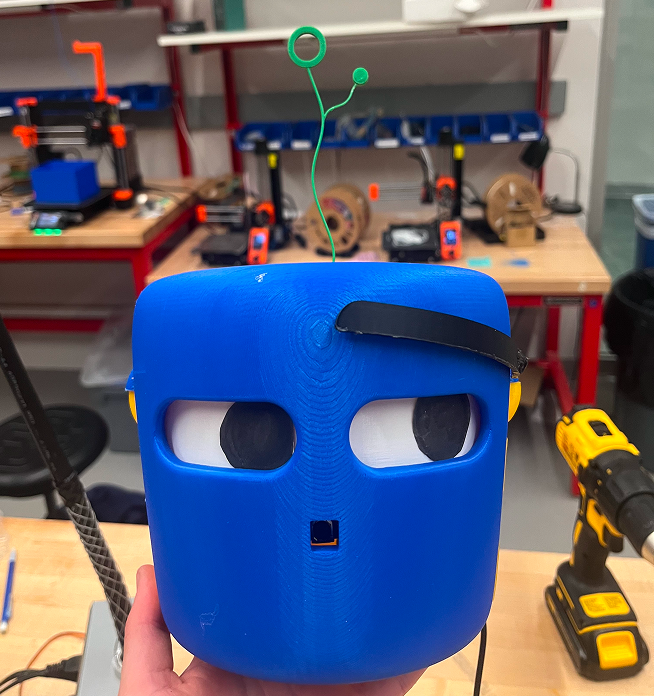

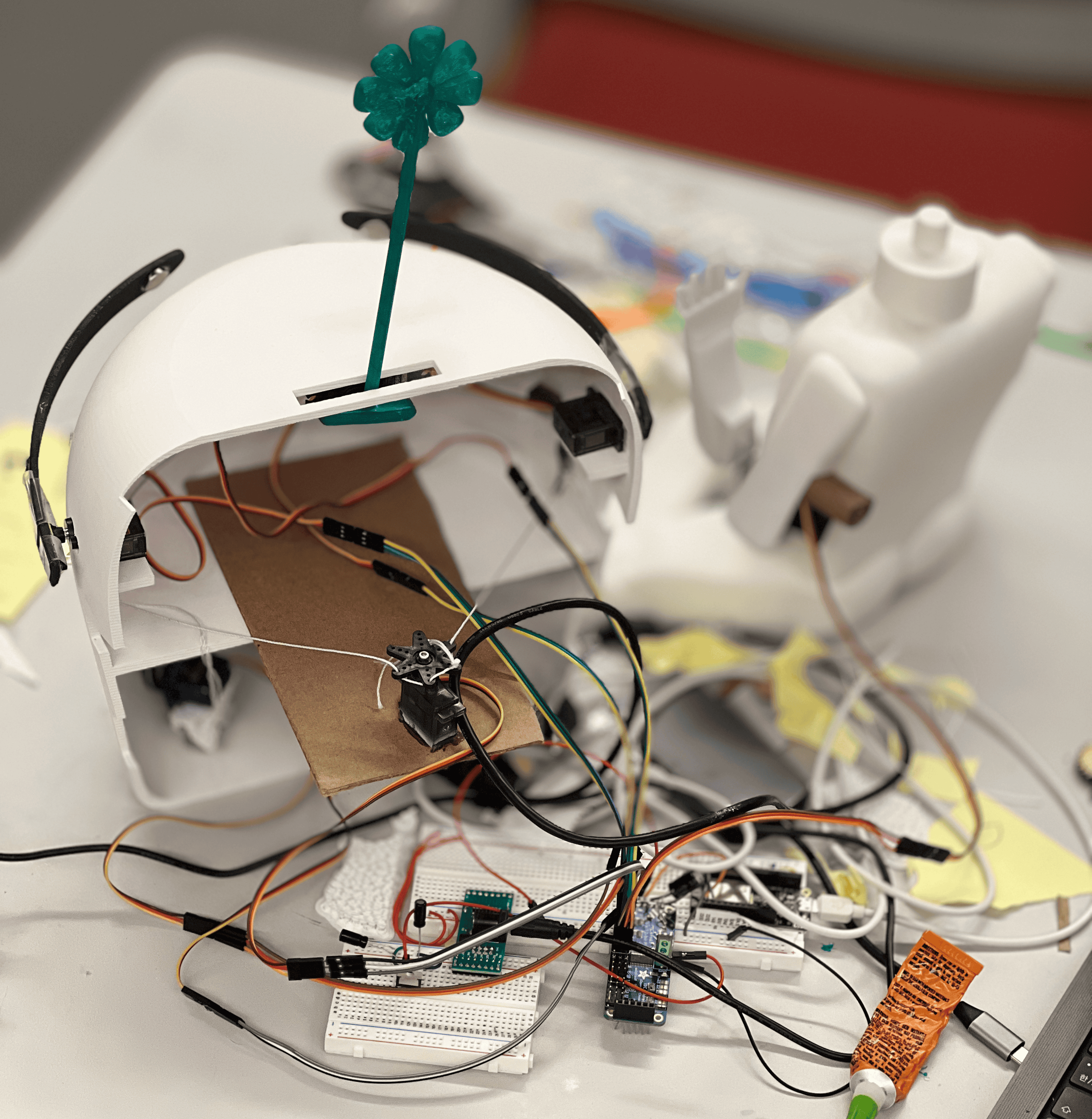

Our first integrated prototype used a 3D-printed head shell to house the servos and internal wiring. However, the first print revealed a major oversight: our internal compartment planning was too tight. Servos jammed against walls, mouth mechanisms couldn’t move freely, and cables created interference.

Servo inconsistency became a recurring issue. Motors stuttered, jittered, or failed to return to neutral positions. To address this, we added a 470 µF capacitor to the power rail, which stabilized voltage and dramatically smoothed motion. This change allowed multiple servos to run simultaneously without brownouts or unresponsive behavior.

We also ran into thermal issues. Our original camera, designed for full-color HD output, overheated after prolonged use — disrupting the emotional feedback loop. We switched to a black-and-white USB camera, which ran cooler, provided enough facial contrast for the Hume API, and held up under longer runs.

During this period, we broke multiple servos, mostly from continuous use and stress-testing beyond normal conditions. This prompted a final design change: modular servo mounts and accessible hatches so we could easily replace them. This not only saved time but laid the groundwork for future iterations where kids or therapists might need to make on-the-fly repairs.

Final Prototype

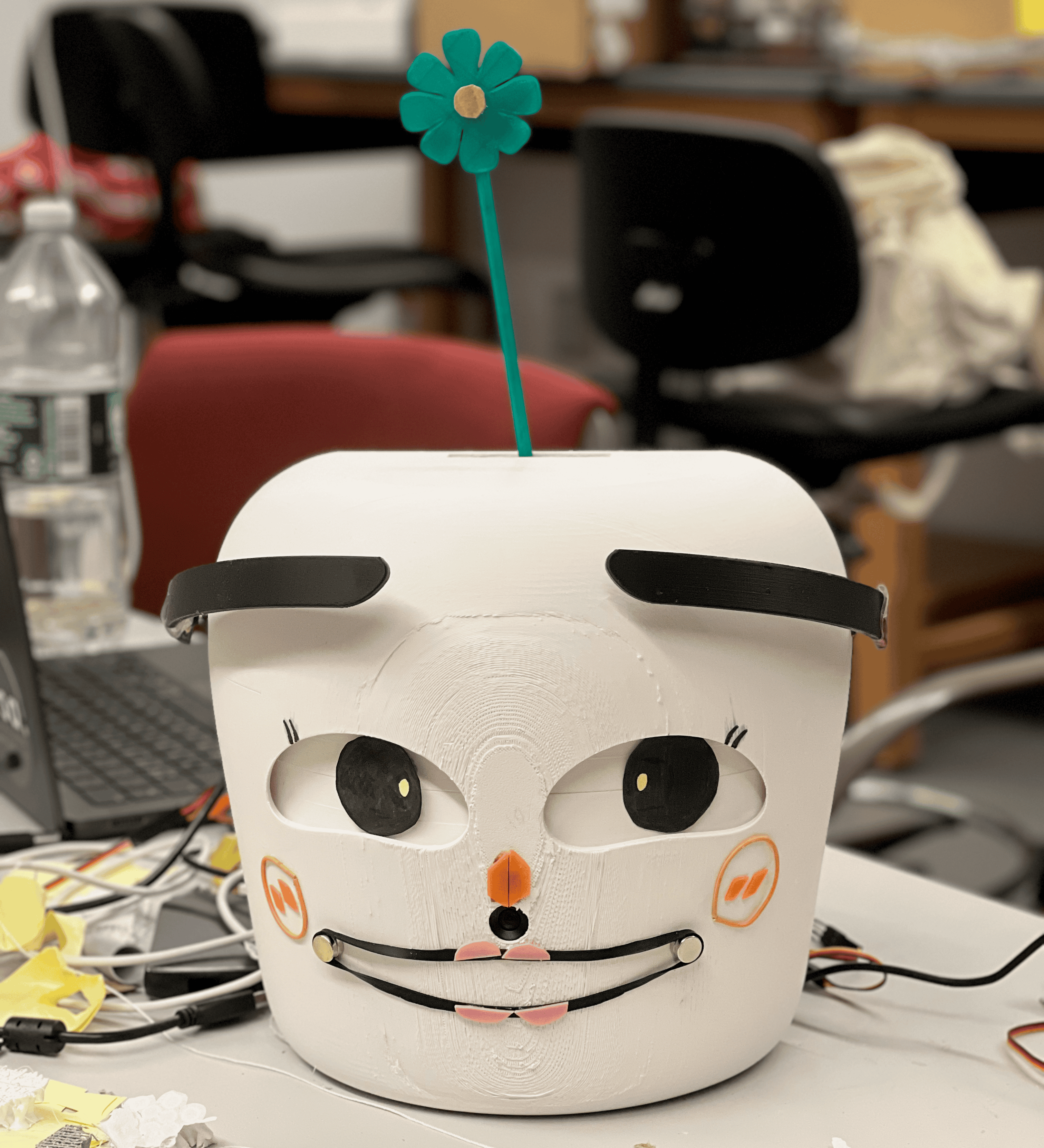

Sprout's final prototype used a PCA9685 PWM driver board to control 7 coordinated servo motors:

- 2 for the eyebrows

- 2 for the mouth corners

- 1 for eye tracking

- 1 for the antenna

- 1 for the arm gesture
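To illustrate how an emotion state could become per-channel values for a PCA9685, here is a small sketch of the arithmetic. The PCA9685 has 12-bit resolution (4096 steps per PWM period); everything else here is an assumption for the example, including the 50 Hz frame rate, the 1000 to 2000 µs pulse range, the channel assignment, and the pose angles. The project ran its servo routines on the Arduino, so this Python version only shows the calculation.

```python
from __future__ import annotations

PWM_FREQ_HZ = 50        # assumed hobby-servo frame rate
PCA9685_STEPS = 4096    # 12-bit resolution of the PCA9685
MIN_PULSE_US = 1000     # assumed pulse width at 0 degrees
MAX_PULSE_US = 2000     # assumed pulse width at 180 degrees
REST_ANGLE = 90         # assumed neutral position for every servo

# Hypothetical channel assignment for the seven servos.
CHANNELS = {
    "brow_left": 0, "brow_right": 1,
    "mouth_left": 2, "mouth_right": 3,
    "eyes": 4, "antenna": 5, "arm": 6,
}

# Hypothetical poses in degrees; servos not listed stay at REST_ANGLE.
POSES = {
    "neutral": {},
    "joy": {"brow_left": 110, "brow_right": 110,
            "mouth_left": 130, "mouth_right": 130},
    "sadness": {"brow_left": 70, "brow_right": 70,
                "mouth_left": 60, "mouth_right": 60},
}


def angle_to_ticks(angle_deg: float) -> int:
    """Convert a servo angle to a PCA9685 tick count out of 4096."""
    angle = max(0.0, min(180.0, angle_deg))  # clamp to the servo's range
    pulse_us = MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * angle / 180.0
    period_us = 1_000_000 / PWM_FREQ_HZ
    return round(pulse_us / period_us * PCA9685_STEPS)


def pose_to_ticks(state: str) -> dict[int, int]:
    """Return {channel: ticks} for all seven servos in the given state."""
    pose = POSES[state]
    return {ch: angle_to_ticks(pose.get(name, REST_ANGLE))
            for name, ch in CHANNELS.items()}


print(pose_to_ticks("joy"))
```

Keeping poses in a table like this separates the expressive design (which angles read as "joy") from the driver arithmetic, so a pose can be retuned without touching the conversion code.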

The head was fully redesigned with rounded contours and neutral white coloring to reduce user anxiety and improve approachability. Internally, we restructured the compartments to give each servo a dedicated space, preventing interference and increasing motion range.

TL;DR: Through layered prototyping, real-time emotion detection, and repeated mechanical iteration, we transformed sketches into a fully functioning robot capable of coordinated, expressive behavior.

Final Outcome

Sprout’s final prototype brought together real-time emotional mirroring, servo-driven expressivity, and a thoughtfully approachable design — all working in sync to create a tangible, responsive companion. After weeks of iteration and engineering, we were able to deliver a robot that could detect a user’s facial expression and physically mirror it, using coordinated movements of the eyebrows, mouth, eyes, antenna, and arm.

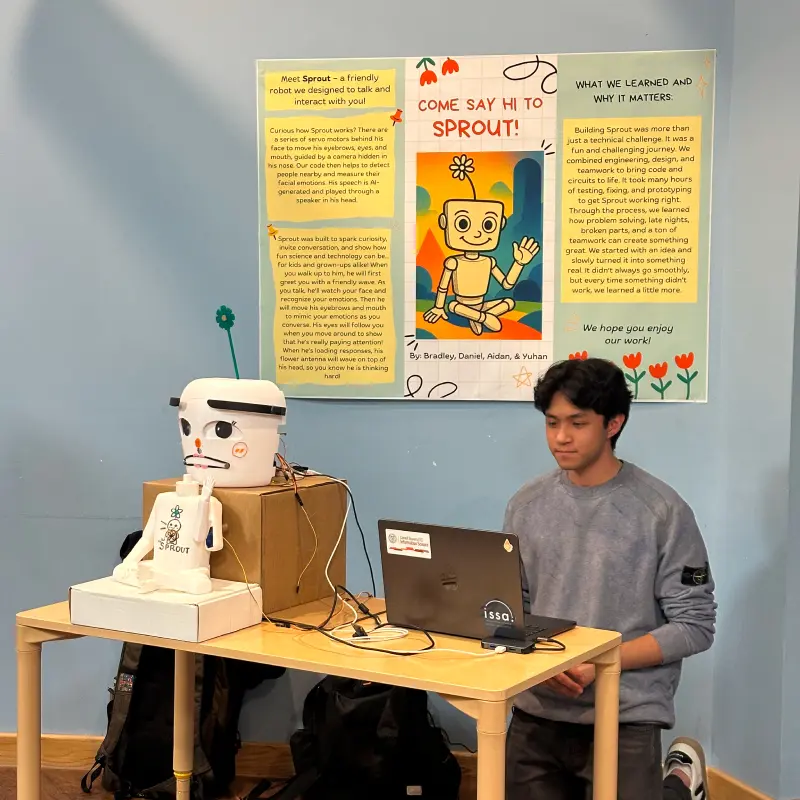

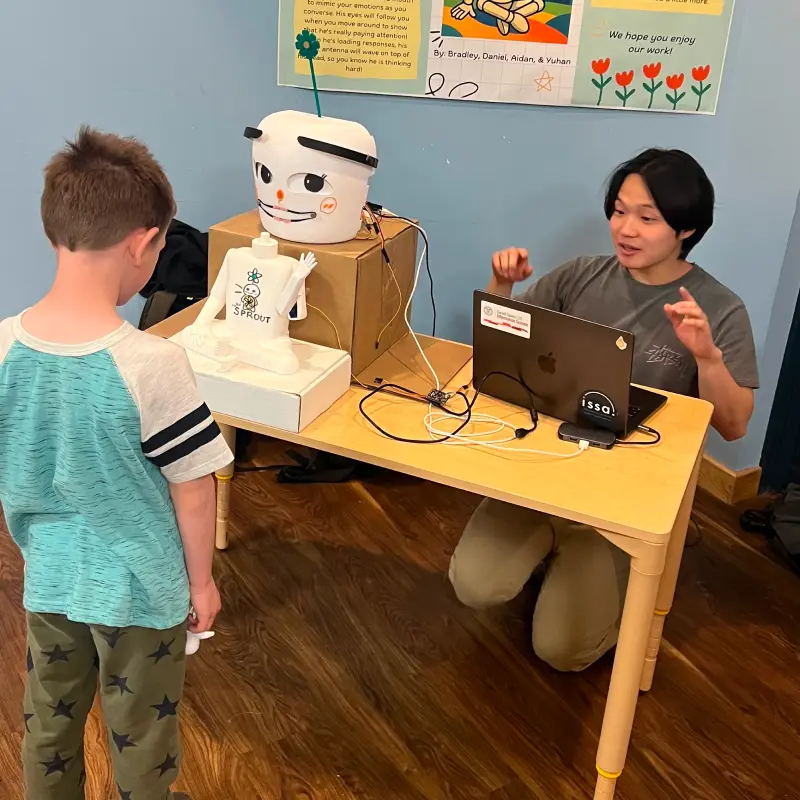

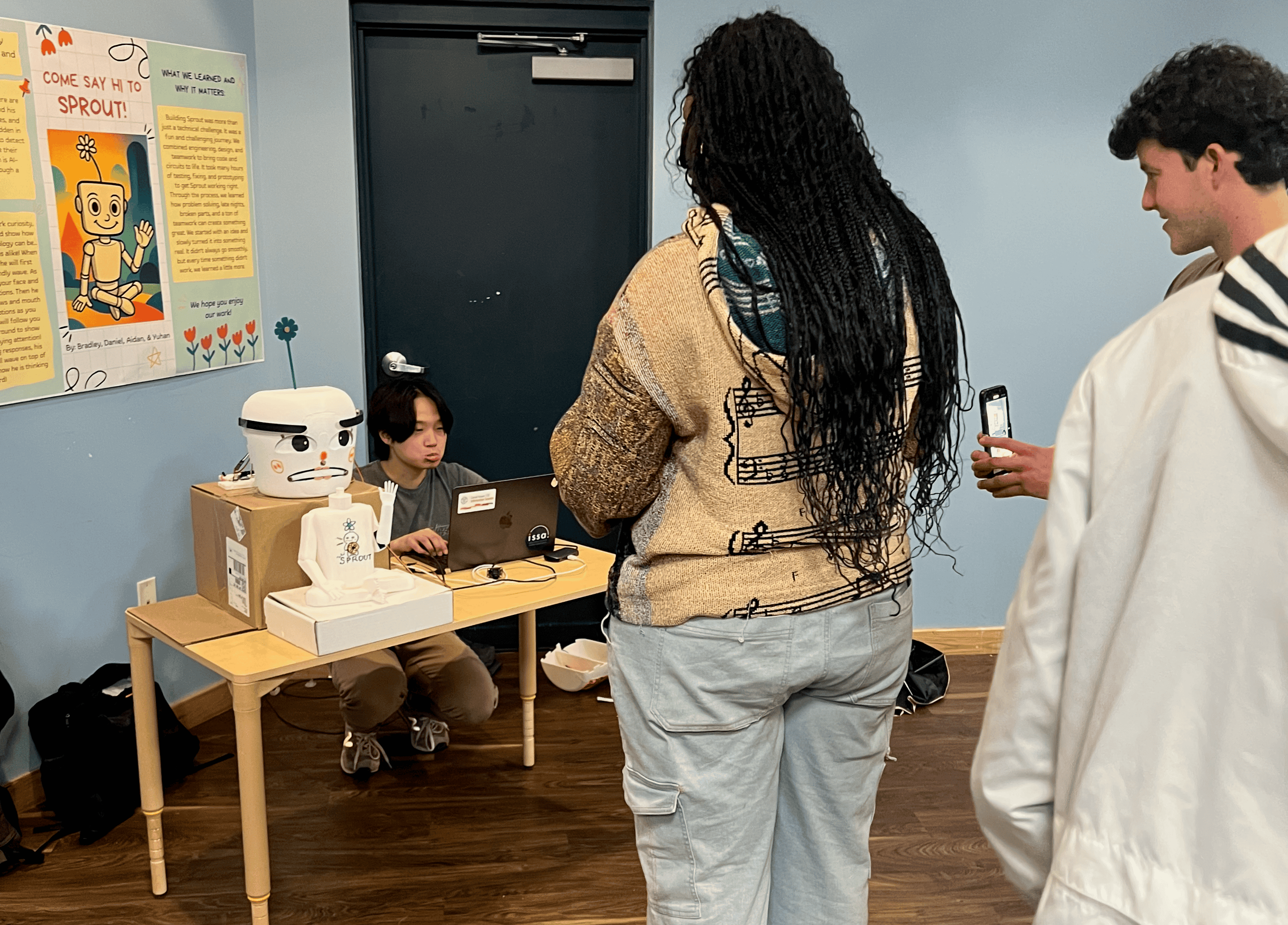

To evaluate Sprout’s impact in a public setting, we showcased the robot at the Sciencenter, a hands-on science museum in Ithaca, NY. The goal was to observe how people — especially children and families — engaged with the robot in an open, unscripted environment. Reactions were immediate and often emotional: kids waved at Sprout, laughed when it mirrored their smiles, and sometimes paused in confusion when it reflected their neutral or sad expressions. Several parents remarked that the robot felt “present” or “attentive” — even though it never spoke a word.

The exhibition setting revealed both strengths and challenges. Sprout’s coordinated expressions created a sense of liveliness, even in short interactions. However, long runs (over two hours) caused the camera to heat up and the servos to drift slightly from calibration. The robot remained functional, but these issues highlighted the need for better thermal management and more power-efficient motion in future iterations.

TL;DR: Sprout successfully interacted with users using live emotional feedback, suggesting that physical mirroring can foster self-awareness and revealing both insights and challenges for future emotional robots.

Building Sprout taught me that designing for emotional expression goes far beyond mechanics — it’s about timing, subtlety, and coherence. Every facial movement had to be carefully considered not only for function, but for how it contributed to Sprout’s overall emotional presence. We learned that users intuitively sense when expression feels “off,” even if they can’t articulate why — which made alignment between hardware, software, and behavior crucial.

Technically, we gained experience in servo tuning, modular hardware design, thermal management, and real-time data integration. We also saw firsthand how fragile emotional interactions can be: if one servo stuttered or one blink came half a second too late, the illusion broke. This forced us to think like both engineers and performers — coordinating multiple systems to deliver a single, unified gesture.

There were plenty of challenges. Our servos broke repeatedly during testing, the camera overheated during long sessions, and we frequently had to rewire internals just to keep iterating. But these setbacks pushed us toward more durable, repairable, and flexible solutions. The decision to modularize the internal layout, for instance, was a direct result of those frustrations — and became one of the most useful design choices we made.

Looking forward, we see several exciting directions for Sprout. First, we’d like to make the robot more portable and handheld, allowing it to be used in therapy sessions where children can interact with it like a companion. This would require redesigning the internal structure to support battery-powered operation and more compact actuators. Second, we’d explore integrating context-aware behaviors — such as shifting gaze based on voice direction or reacting differently to repeated expressions — to deepen the emotional feedback loop. Finally, better thermal and power management would allow Sprout to operate in longer sessions without overheating or desyncing.

TL;DR: Building Sprout taught me that emotional robotics demands harmony between hardware, software, and expression — and that designing for empathy means designing for subtlety, timing, and repairability.